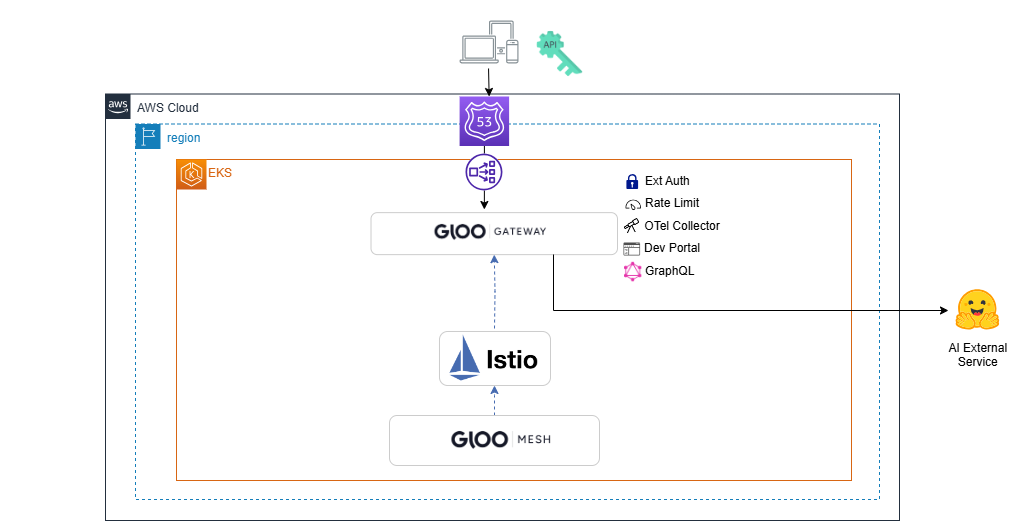

Lab 4 - AI Integration

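Define an ExternalService for the Hugging Face Inference API so the gateway can reach api-inference.huggingface.co over HTTPS on port 443: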

kubectl apply -f - <<EOF
apiVersion: networking.gloo.solo.io/v2
kind: ExternalService
metadata:
  name: huggingface-api
  namespace: online-boutique
spec:
  hosts:
  - api-inference.huggingface.co
  ports:
  - name: https
    number: 443
    protocol: HTTPS
    clientsideTls: {}
EOF

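Add a RouteTable that matches the /huggingface prefix, rewrites the path and host, and forwards the request to the huggingface-api ExternalService: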

kubectl apply -f - <<EOF
apiVersion: networking.gloo.solo.io/v2
kind: RouteTable
metadata:
  name: direct-to-huggingface-routetable
  namespace: online-boutique
spec:
  workloadSelectors: []
  http:
  - name: demo-huggingface
    labels:
      route: huggingface
    matchers:
    - uri:
        prefix: /huggingface
    forwardTo:
      pathRewrite: /models/openai-community/gpt2
      hostRewrite: api-inference.huggingface.co
      destinations:
      - kind: EXTERNAL_SERVICE
        ref:
          name: huggingface-api
        port:
          number: 443
EOF
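To make the effect of the route concrete, the sketch below mimics the prefix and host rewrite in plain shell. It is an illustration only, not how the gateway implements it: the function name `upstream_url` is hypothetical, and it assumes the common prefix-rewrite behavior in which the matched prefix is replaced and the rest of the path is kept.

```shell
#!/usr/bin/env bash
# Illustrative only: mimics the rewrite that the RouteTable requests.
# upstream_url is a hypothetical helper, not part of any Gloo tooling.
upstream_url() {
  local request_path="$1"
  local prefix="/huggingface"                     # matchers.uri.prefix
  local rewrite="/models/openai-community/gpt2"   # forwardTo.pathRewrite
  local host="api-inference.huggingface.co"       # forwardTo.hostRewrite

  case "$request_path" in
    "$prefix"*)
      # Replace the matched prefix and keep whatever follows it (assumed behavior).
      printf 'https://%s%s%s\n' "$host" "$rewrite" "${request_path#"$prefix"}"
      ;;
    *)
      # No matcher applies, so this route would not handle the request.
      return 1
      ;;
  esac
}

upstream_url /huggingface
# prints https://api-inference.huggingface.co/models/openai-community/gpt2
```

A request to /huggingface on the gateway should therefore reach the same upstream URL as a direct call to the Hugging Face model endpoint.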

export HF_API_TOKEN="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"

curl https://api-inference.huggingface.co/models/openai-community/gpt2 \
  -X POST \
  -d '{"inputs": "Write me a 30 second pitch on why I should use an API gateway in front of my LLM backends"}' \
  -H 'Content-Type: application/json' \
  -H "Authorization: Bearer ${HF_API_TOKEN}"

curl http://$GLOO_GATEWAY/huggingface \
  -X POST \
  -d '{"inputs": "Write me a 30 second pitch on why I should use an API gateway in front of my LLM backends"}' \
  -H 'Content-Type: application/json' \
  -H "Authorization: Bearer ${HF_API_TOKEN}"
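The direct call and the gateway call differ only in the URL, so a small wrapper can make the comparison easier to read. The following is a minimal sketch under stated assumptions: `hf_status` and `PROMPT_BODY` are hypothetical names introduced here, and the function prints only the HTTP status code using curl's `-w '%{http_code}'` write-out option.

```shell
#!/usr/bin/env bash
# Hypothetical helper for this lab: send the same prompt to a given URL
# and print only the HTTP status code of the response.
PROMPT_BODY='{"inputs": "Write me a 30 second pitch on why I should use an API gateway in front of my LLM backends"}'

hf_status() {
  local url="$1"
  # -s silences progress output, -o /dev/null discards the body,
  # -w '%{http_code}' prints the status code once the transfer finishes.
  curl -s -o /dev/null -w '%{http_code}\n' "$url" \
    -X POST \
    -d "$PROMPT_BODY" \
    -H 'Content-Type: application/json' \
    -H "Authorization: Bearer ${HF_API_TOKEN}"
}

# Usage (needs network access, HF_API_TOKEN, and GLOO_GATEWAY):
#   hf_status https://api-inference.huggingface.co/models/openai-community/gpt2
#   hf_status "http://$GLOO_GATEWAY/huggingface"
```

If the route is working, both invocations would be expected to report the same status code.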

TODO: Text

kubectl apply -f - <<EOF
EOF

TODO: Text

kubectl apply -f - <<EOF
EOF

Define an ExternalService for the OpenAI API so the gateway can reach api.openai.com over HTTPS on port 443:

kubectl apply -f - <<EOF
apiVersion: networking.gloo.solo.io/v2
kind: ExternalService
metadata:
  name: openai-api
  namespace: online-boutique
spec:
  hosts:
  - api.openai.com
  ports:
  - name: https
    number: 443
    protocol: HTTPS
    clientsideTls: {}
EOF

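Add a RouteTable that matches the /openai prefix, rewrites the path to /v1/chat/completions and the host to api.openai.com, and forwards the request to the openai-api ExternalService: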

kubectl apply -f - <<EOF
apiVersion: networking.gloo.solo.io/v2
kind: RouteTable
metadata:
  name: direct-to-openai-routetable
  namespace: online-boutique
spec:
  workloadSelectors: []
  http:
  - name: catch-all
    labels:
      route: openai
    matchers:
    - uri:
        prefix: /openai
    forwardTo:
      pathRewrite: /v1/chat/completions
      hostRewrite: api.openai.com
      destinations:
      - kind: EXTERNAL_SERVICE
        ref:
          name: openai-api
        port:
          number: 443
EOF

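Send a chat completion request through the gateway. This assumes OPENAI_API_KEY and GLOO_GATEWAY are exported in your shell: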

curl https://$GLOO_GATEWAY/openai \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [
      {
        "role": "system",
        "content": "You are a solutions architect for kubernetes networking, skilled in explaining complex technical concepts surrounding API Gateway and LLM Models"
      },
      {
        "role": "user",
        "content": "Write me a 30 second pitch on why I should use an API gateway in front of my LLM backends"
      }
    ]
  }'

For comparison, send the same request directly to the OpenAI API:

curl https://api.openai.com/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [
      {
        "role": "system",
        "content": "You are a solutions architect for kubernetes networking, skilled in explaining complex technical concepts surrounding API Gateway and LLM Models"
      },
      {
        "role": "user",
        "content": "Write me a 30 second pitch on why I should use an API gateway in front of my LLM backends"
      }
    ]
  }'

export GLOO_GATEWAY=$(kubectl -n gloo-mesh-gateways get svc istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].*}')
printf "\n\nGloo Gateway available at http://$GLOO_GATEWAY\n"
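The jsonpath lookup returns an empty string while the load balancer has no address yet, and every later curl then fails with a confusing URL error. A minimal guard, assuming you want to stop early in that case, could look like the sketch below; `require_gateway` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail early when the gateway address is missing.
require_gateway() {
  if [ -z "${GLOO_GATEWAY:-}" ]; then
    echo "GLOO_GATEWAY is empty; the load balancer may still be provisioning" >&2
    return 1
  fi
  echo "Using gateway at http://${GLOO_GATEWAY}"
}

# Usage: run after exporting GLOO_GATEWAY and before the curl commands.
#   require_gateway || echo "re-run the export once the service has an address"
```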

curl http://$GLOO_GATEWAY/openai \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [
      {
        "role": "system",
        "content": "You are a solutions architect for kubernetes networking, skilled in explaining complex technical concepts surrounding API Gateway and LLM Models"
      },
      {
        "role": "user",
        "content": "Write me a 30 second pitch on why I should use an API gateway in front of my LLM backends"
      }
    ]
  }'